Scenario: Truncate Table before loading the data.However if the loading fails rollback the truncate.If loading succeeds commit transaction.

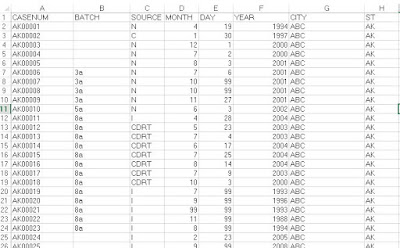

Data Source: Excel File

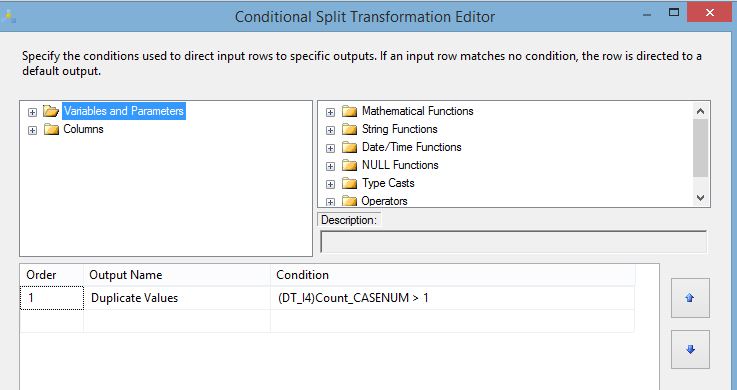

OLEDB Destination:

A normal package to truncate case_details and load the data from the source file would look like:

However in the event the load fails sql command is still executed.

Hence a normal package with SQL COMMAND & DATA FLOW TASK will not work.

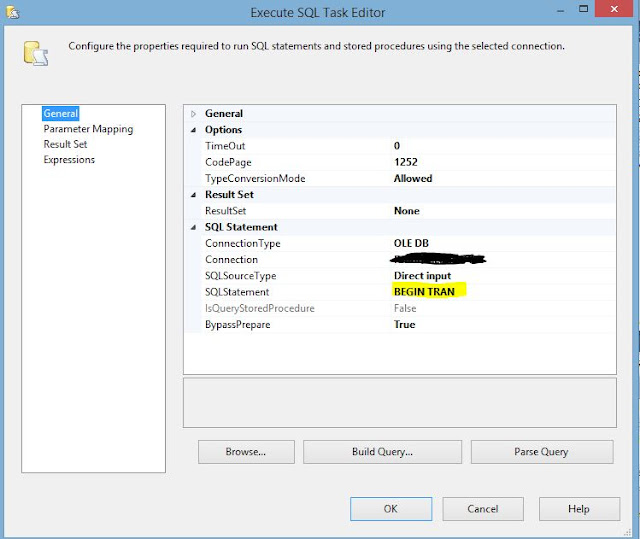

In such a situation wherein we need to rollback/commit tasks BEGIN TRAN & CONTAINER need to be used

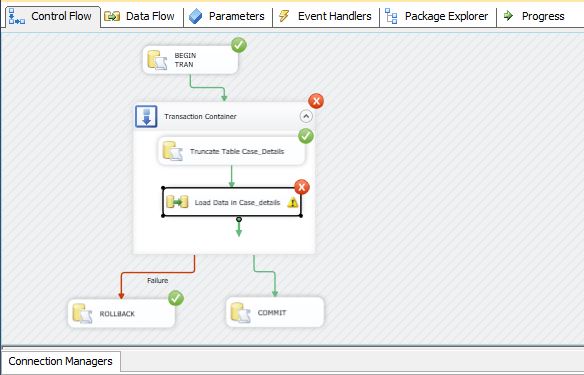

Resolved Package:

First TASK is SQL COMMAND TASK connected to container

The Transaction Container has precedence constraint on success to SQL COMMAND TASK(COMMIT)

.It has precedence constraint on failure to SQL COMMAND TASK(ROLLBACK)

For the successful execution of package set the Trueproperty of OLEDB Connection Manager as TRUE

On Execution:

If loading is successful the transaction commits

If loading fails the transaction rollbacks