In a real time scenario there can be multiple data files which are used to load data in staging tables and then need to be archived.Archival of data files can be done by using 2 control flow tasks

For-each-Loop

File System Task.

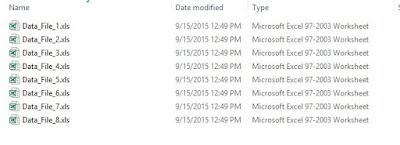

Source Files:

Package:First let's create a user variable file name .Now Add a For-Each-Loop Container Control Task to your package design.

Next Step is configuration of for each loop container.Since we will loop through files select the for each file enumerator

Select the source folder,file type.Since we only require the file name hence select name only.If the source directory consists of subfolders that have files that need to be archived select travserse subfolders.

In the variable mapping select the user variable file name with an index 0.With each occurence of file in the loop the name of the file will be stored in the user variable.

After configuring the for each loop containers let's add a File System Task

Configuration of File System Task:

Create a File Connection Manager for Destination Directory

If delayvalidation property of the file system task is set to false the task shows an error "variable user::filename is used as a source or destination and is empty."It recieves this error as the variable filename gets its value only on the execution of for each loop.

Hence set the delayvalidation property to true.

Before execution of package

Source Directory:

Destination Directory: